I’ve noticed something interesting about the current hype around LLMs. When the average user talks about them, they usually aren’t talking about the actual models themselves like GPT, PaLM, LaMDA, or Llama. They’re usually talking about the chatbots that they power—ChatGPT, Bard and Bing Chat, just to name a few.

There’s an important distinction to make there; the former is the brains of the operation, and like a brain, its thoughts are hidden from someone on the outside. What people see and interact with is the face of the operation.

You could even go so far as to call it an “inter-face”.

Dubious etymology aside, that brings me to my second realisation. Why is the chatbot the most common user interface of LLMs?

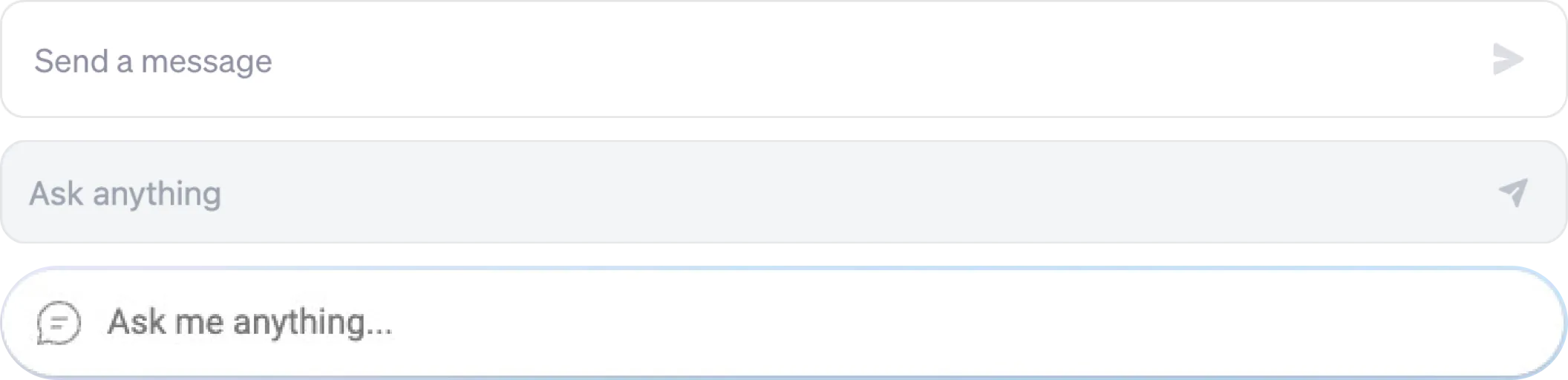

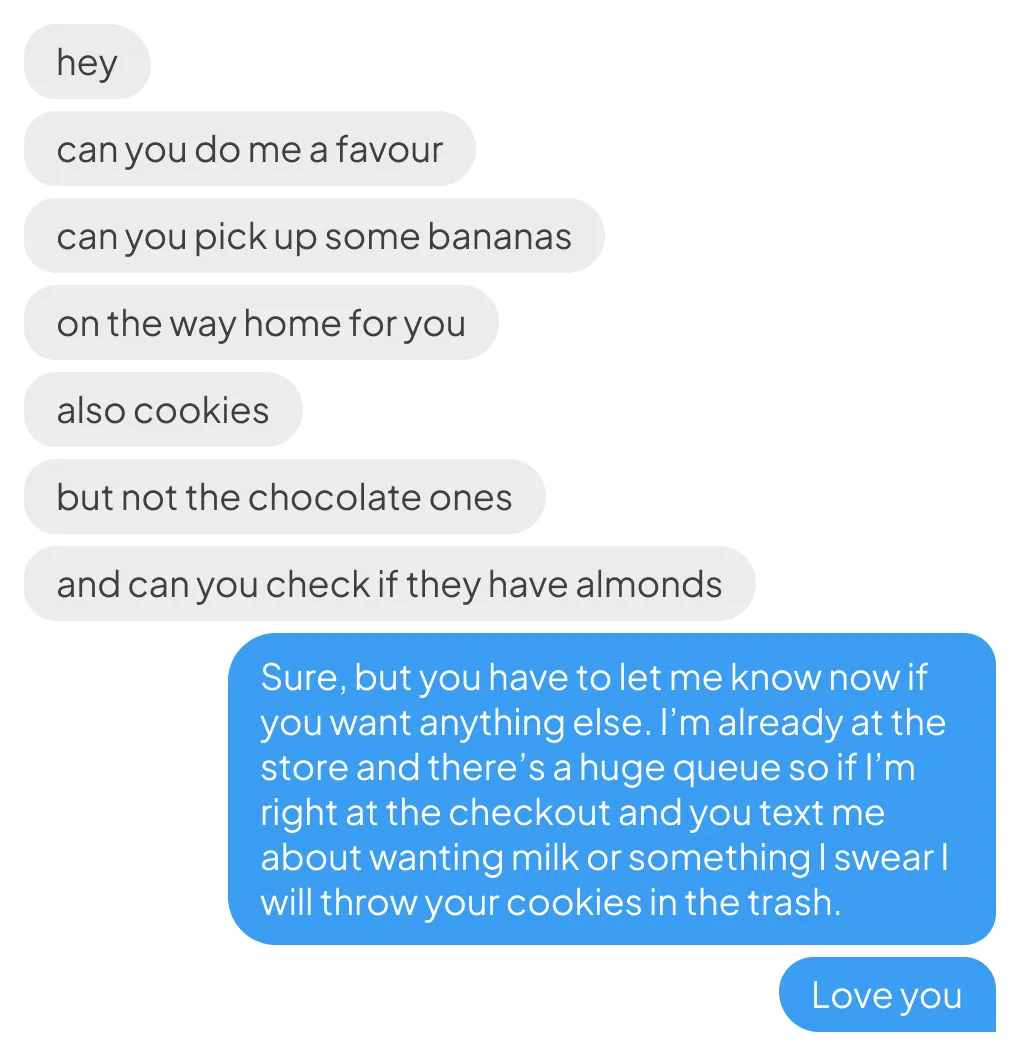

“Send a message”. “Ask anything”. “Ask me anything”.

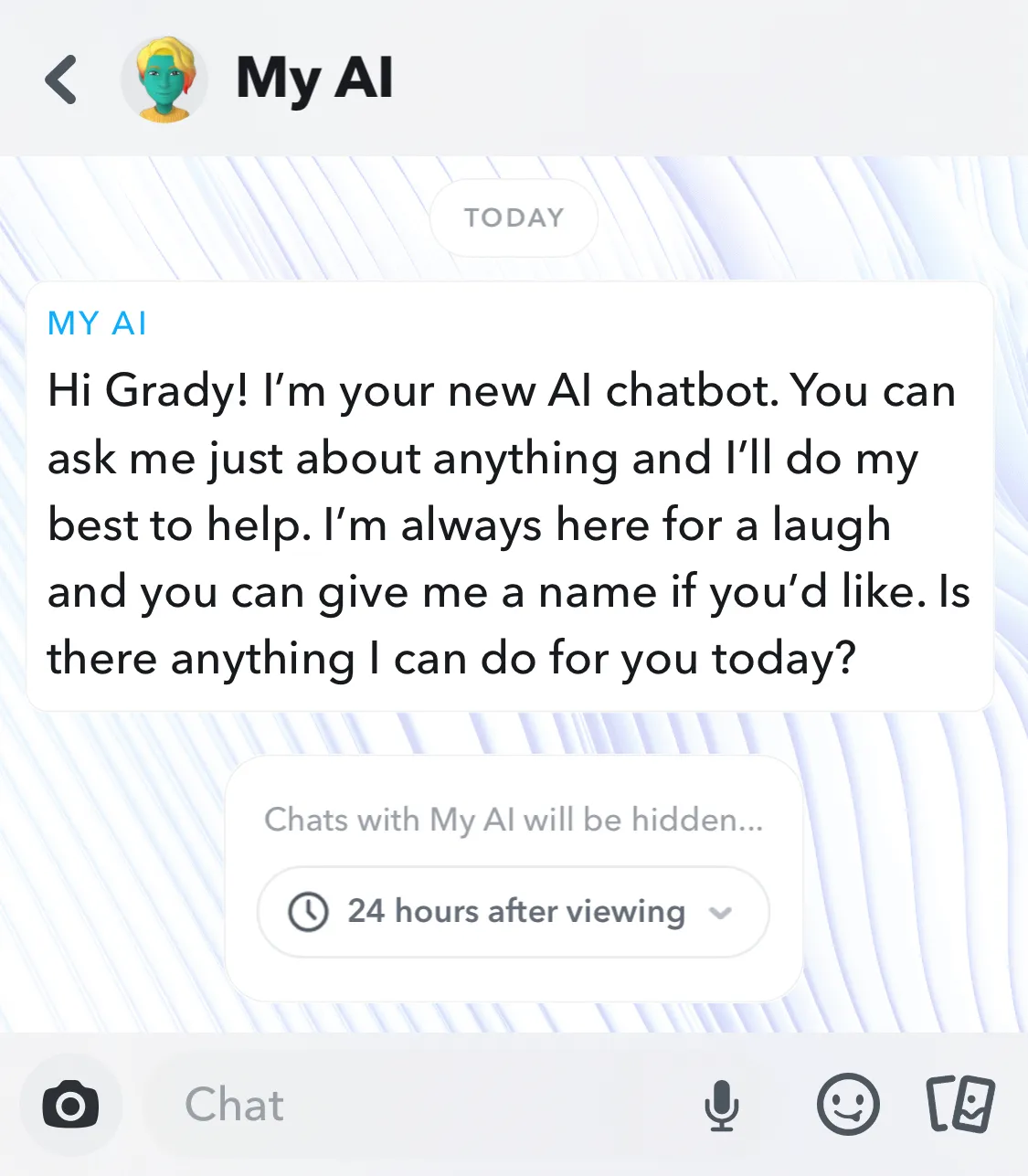

They all invite the user to use natural language to chat with a machine. Snapchat takes it a step further, with the My AI interface being virtually indistinguishable from a regular chat.

It’s not just chat inputs either. There are also icons, chat bubbles, avatars, and if you’re using ChatGPT, Bing Chat, or HuggingChat, it’s even there in the name. All of which reinforces the idea that LLMs are for chatting with.

There’s nothing wrong with those UI elements themselves; in fact, having those motifs and patterns is an example of good UI design. If you want your users to chat with the LLM, it makes sense to design a chat interface. It’s self-explanatory, intuitive, and usable.

The issue I have with this trend, however, lies in the broader user experience of that interaction. I don’t think we’ve paid enough attention to the nuances of using a conversation to metaphorize interactions with LLMs, and that’s going to lead to huge problems that go beyond usability.

But first, let’s talk about why this default interface came about in the first place.

Conversational user interfaces

The conversational user interface (CUI) has been around for a while. It’s a natural way of framing an interaction with a lot of back-and-forth (just like a real conversation, although I have been in quite a few one-sided ones that would dispute this), and it inevitably became the standard for instant messaging platforms, from the earliest IRC clients to modern-day Telegram, WhatsApp, and the like.

Its use expanded beyond there, and for good reason. CUIs in something as ubiquitous as instant messaging built a set of user expectations that were transferable; designers could make a new system instantly intuitive by evoking that same context of a human conversation, even if a human wasn’t on the other end.

Between a human and a machine, it created a more linear and guided experience than a graphical interface, and it excelled in cases where the available actions were too many to show all at once or had to be done in a specific order.

At least, that’s what was theoretically possible. In reality, chances are you’ve had the misfortune of wrangling a chatbot for customer support, or of trying to find the magic words for a voice assistant that refuses to understand you (you know which one you are).

It’s here that we find a problem: the CUI is deceptively versatile. What might feel like a natural design decision may actually backfire when user expectations of a conversational interface don’t quite transfer.

Expectations versus reality

What exactly is it about an interface that makes it intuitive? There are too many theories to list, but one definition I’ve found helpful (and not just because it’s the definition I based my college thesis on) is that something feels intuitive when the user’s mental model of the system (conscious or otherwise) matches up with the actual model of the system. In other words, when the system behaves as you expect it to.

The CUI created a strong set of user expectations for how a system using it should feel – just like a regular conversation. That gap between the user’s and system’s model was minimal in the context of instant messaging; after all, you were indeed communicating with a human on the other end, just with some hand-wavey action-at-a-distance magic in between.

With early chatbots, the gap was a little wider than acceptable for an intuitive user experience. Many felt like a thin wrapper around what amounted to an FAQ, except far more annoying to use. Others that did use some form of language processing, as pioneered by ELIZA, still felt artificial and canned, mostly repeating after the user or giving generic answers (which was precisely what they were doing, by the way).

Simply put, these early chatbots were just not smart. They couldn’t live up to user expectations of an actual conversation despite having an interface that suggested one. The dissonance was obvious and jarring, and that led to experiences that felt mechanical at best, and frustrating at worst.

Chatbots powered by LLMs, on the other hand, feel extremely smart (I’m anthropomorphizing here, but technicalities of LLMs just being really good statistical models aside, this is how the average user would perceive them). Their problem isn’t that they can’t live up to the expectations of a conversation; it’s that they seemingly meet those expectations while subverting them entirely.

To reuse the metaphor of brains and faces, the difference between early and LLM chatbots is like the difference between putting a human face on the brain of a worm vis-à-vis (get it? A French pun on “face”? Never mind.) the brain of, well… an LLM.

One is dumb, and the other is smart. Neither is remotely close to human.

The apparent intelligence of the LLM is just not the kind of intelligence the average user is expecting. Deep down, the underlying model of the system still doesn’t match up with the expectations of the user.

Confidently incorrect

LLMs have their quirks and flaws, the most egregious of which is that they lie (“lie” is a strong word and doesn’t quite capture the actual nature of the flaw, but it’s nevertheless effective; Simon Willison makes a great argument for the word), and they’re really good at it. This idea of being “confidently incorrect” is one of the biggest warnings going around about LLMs at the moment, and it has been causing quite a stir.

This isn’t a new problem for chatbots; part of the frustration with those early chatbots came from the fact that they were confidently and stubbornly responding with nonsense. But the technical capabilities of an LLM combined with the UX of a conversational interface mean that there is no reason for a user to suspect anything is wrong. The veil is peeled back slightly in the occasional moments where LLMs get too stubborn with an obvious lie (especially when it comes to math), but for the majority of casual users, the LLM has become an extremely knowledgeable and creative chat partner, one that isn’t expected to lie.

Instead of exposing the flaws of the system, the CUI gives the LLM the legitimacy of a conversational equal, and more often than not, the authority of a knowledgeable source.

Here is also where the problem goes deeper, into the ethics of LLM-powered applications. If we continue relying on this design pattern as-is for LLMs, the only user expectations we will build are distrust and aversion, once people who assume LLMs to be superpowered search engines find out the hard way that they aren’t.

We either need to build LLMs that can’t lie, or design interfaces that don’t suggest honesty.

The former is impossible by design. The hallucinations that we perceive as lies are also what makes LLMs creative, rather than being a mere search engine. There are ways to mitigate hallucinations and constrain LLMs to existing facts, but these methods aren’t foolproof and come with tradeoffs in predictive value.

That leaves us with the other half of the equation; if we can’t change the system model, we need to change the user’s model of how they imagine the system behaves. That’s the realm of UX design and a huge question that will take more than a single post to explore, and we’ve not even mentioned LLM interfaces that aren’t chatbots.

Closing the gap

Realigning user expectations to better match the actual behaviour of the LLM is a daunting task, but keep in mind that we don’t have to confer a precise technical understanding of LLMs to everyone who uses them. The neural networks that power LLMs are effectively a black box even to their engineers, much less the average user.

What we need is an accurate understanding. One that’s good enough to shift their expectations towards something more reflective of how LLMs operate, even if that understanding is not fully fleshed out down to the technical details.

The conversational UI as it currently stands gives users an extremely precise understanding of the interaction (after all, it’s a conversation). That understanding was accurate in the context of instant messaging, but as we’ve seen so far, it’s off the mark in the context of LLMs.

We don’t have to give up the conversational UI entirely, though. Even the most minor UI details have the potential to greatly influence a user’s perception, and that’s what UX design is for, and what it should be figuring out.

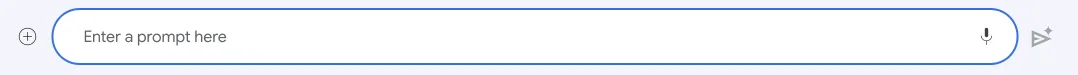

Take an example that I sneakily withheld from the initial screenshots at the start of this post: Google Bard’s chat input.

“Enter a prompt here”. Not quite chatty and a little bit technical, and most definitely not what you’d use with a person. Whether an intentional design choice or not, it makes all the difference in reframing the same interaction as one that isn’t quite a conversation with human intelligence, but something a little different.

Instead of a natural conversation with a human-like counterpart, “Enter a prompt here” suggests something a little exploratory, a little scientific about this interaction. It doesn’t invite the user to chat with the LLM; one could go so far as to say that it places the user in the position of an experimenter, writing instructions to prompt a response from a test subject.

And that’s what a single line of text can do. We’ve not even talked about bigger elements of UI design yet!

One other extremely subtle bit of interesting UI design I’ve seen is how ChatGPT displays its responses.

Here’s a quick example, slightly slowed down so the action is visible:

It looks like ChatGPT is typing, and there’s even a longer pause at “create” as if the chatbot were actually thinking.

Compare that to Bard, which takes a moment of silent thinking before showing a full, final response.

I’d argue that both evoke human conversation. And I have the perfect analogy for the difference between ChatGPT and Bard’s design:

Within the context of a chat conversation, it seems like ChatGPT’s typing style is the former, suggesting something a little more haphazard and stream-of-consciousness, like someone too trigger-happy with the send button. Bard feels a bit more well thought-out and researched, taking a moment to refine its output into something coherent, maybe even something correct.

Both mirror the experience and expectations of instant messaging; you probably know actual humans that do both, but I argue that ChatGPT does so in a helpful way because it actually evokes something about talking to a human that is also accurate to GPT: fallibility.

That subtle difference in how an LLM’s output is displayed makes a huge impact on how that output is received by the user and how it is factored into their own decision-making. After all, which human would you trust more: someone who immediately starts rattling off whatever comes to mind, or someone who comes back with a full answer to your question?

This isn’t to say that Bard’s design isn’t reflective of its system; one of its key differences from ChatGPT is that it does have access to the internet, and that perhaps makes its UI design a little more appropriate. Nevertheless, it still warns you in bold about its shortcomings (another, more explicit form of UI that we’ll have to explore another time):

One more interesting thing about ChatGPT’s typing. It closes the gap between expectations and behaviour not just in a metaphorical way, but in a technical way, too.

You might think that whoever designed its interface wanted to intentionally emulate the experience of human interaction, even going so far as to code in random longer pauses to evoke thinking. ChatGPT itself even claims as much…

I’m programmed to simulate the experience of seeing text being typed out in real-time to make our conversation feel more natural and interactive.

…except that ChatGPT lied (typical).

What GPT doesn’t seem to be aware of is that ChatGPT uses an event stream for its output, displaying tokens as the LLM generates them. Simply put, what you’re seeing up there is actually the GPT model working in real time. That typing animation isn’t a separate design choice to emulate human interaction, but an actual artefact of the system model.

It’s likely a happy accident, but that’s what I think makes this piece of UI design so good. It’s not only suggestive of the right conversation context, but it is also transparent about the behaviour of the model, effectively showing how it generates responses on the fly. This may not be immediately obvious to someone without technical knowledge about LLMs, but it still does something to temper expectations on a subconscious level.
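The post doesn’t detail ChatGPT’s wire format, so what follows is only a minimal Python sketch of the general technique: a client reads a server-sent event stream (`text/event-stream`) and either redraws the message after every token, as ChatGPT appears to, or waits for the whole response before showing anything, as Bard appears to. The JSON payload with a `token` field, the `[DONE]` sentinel, and all function names are hypothetical, chosen for illustration; only the `data:`/blank-line event framing follows the event-stream format.

```python
import json
from typing import Iterable, Iterator, List


def parse_sse(lines: Iterable[str]) -> Iterator[str]:
    """Yield the data payload of each server-sent event (text/event-stream).

    An event is one or more "data:" lines terminated by a blank line.
    Other fields (event:, id:, retry:) and ":" comment lines are ignored
    in this sketch, and an unterminated event at end of stream is dropped.
    """
    data_lines: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line dispatches whatever data has accumulated.
            if data_lines:
                yield "\n".join(data_lines)
                data_lines = []
        elif line.startswith("data:"):
            value = line[len("data:"):]
            # A single space after the colon is not part of the value.
            if value.startswith(" "):
                value = value[1:]
            data_lines.append(value)


def stream_tokens(lines: Iterable[str]) -> Iterator[str]:
    """Yield tokens one by one as their events arrive."""
    for payload in parse_sse(lines):
        if payload == "[DONE]":  # hypothetical end-of-stream sentinel
            return
        yield json.loads(payload)["token"]  # hypothetical payload shape


def render_streaming(lines: Iterable[str]) -> List[str]:
    """'Typing' display: redraw the message after every token."""
    frames: List[str] = []
    text = ""
    for token in stream_tokens(lines):
        text += token
        frames.append(text)  # what the user would see at this moment
    return frames


def render_buffered(lines: Iterable[str]) -> str:
    """'Silent thinking' display: show nothing until the response is complete."""
    return "".join(stream_tokens(lines))


# A made-up stream of three tokens, one event per token.
example = [
    'data: {"token": "Let"}', "",
    'data: {"token": " me"}', "",
    'data: {"token": " create"}', "",
    "data: [DONE]", "",
]

print(render_streaming(example))  # ['Let', 'Let me', 'Let me create']
print(render_buffered(example))   # Let me create
```

Nothing in the streaming path of this sketch adds pauses on purpose: each frame appears only when the next event arrives, so any hesitation on screen reflects how quickly tokens are produced and delivered. The buffered version hides that signal entirely.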

That’s all to say that we don’t have to abandon the CUI entirely; we just need to find the right implementation of it for the right kind of language model. We can choose to lean into other aspects of conversation beyond the well-trodden path, and potentially close the gap between the system and the user instead of widening it.

That could mean leaning into specific and appropriate aspects of human conversation, or shifting away from it entirely. What’s important is that the design decision is deliberate and informed. If what we’ve covered so far really is a mere happy accident of just having to create a front-end for an LLM, imagine what we could do if we started actually designing them with intention.

Final thoughts

The huge boom in the technical progress of LLMs is fascinating and impressive, but we’ve not talked enough about how interactions with them are designed, and that is a problem. We need to design a face for LLMs that fits their brains, and that means being more intentional about how we use CUIs, if at all.

Otherwise, we leave users with an inaccurate perception of the systems they interact with. When it comes to LLMs, this isn’t just a huge usability problem, but also one of ethics and safety. Our overreliance on a decades-old design pattern will create problems on both fronts if we don’t also think about how it needs to evolve with the technology behind it.